Hi friends. Today is June 18, 2022.

And while I’m deep in the woods of Montana this week — cell service poor, scenery awesome — I’m really, sincerely excited to have the amazing Jacqueline Nesi guest-editing this edition. Jacqueline is a psychologist and professor at Brown University, where she researches issues like whether social media use correlates with teen depression (yes, but it’s complicated), and whether more frequent selfie-posters have lower self-esteem (they don’t!). She also writes the free newsletter Techno Sapiens, which, among many other things, applies lessons from current research to newsy tech topics and questions — like, for instance, whether Google’s sentient robots will destroy us. 🙃

So! Please enjoy this guest edition. Please subscribe to Techno Sapiens, it is excellent. And please enjoy the hell out of your remaining weekend. I’ll be back next week! —Caitlin

Well, it’s finally happening. The robots are coming for us.

We’ve gotten a glimpse of LaMDA, Google’s artificial intelligence chatbot, and the results, my friends, are the dystopian future we feared. LaMDA is a language model that indiscriminately ingests hundreds of gigabytes of text from the Internet (what could go wrong?), and in doing so, learns to respond to written prompts with human-like speech.

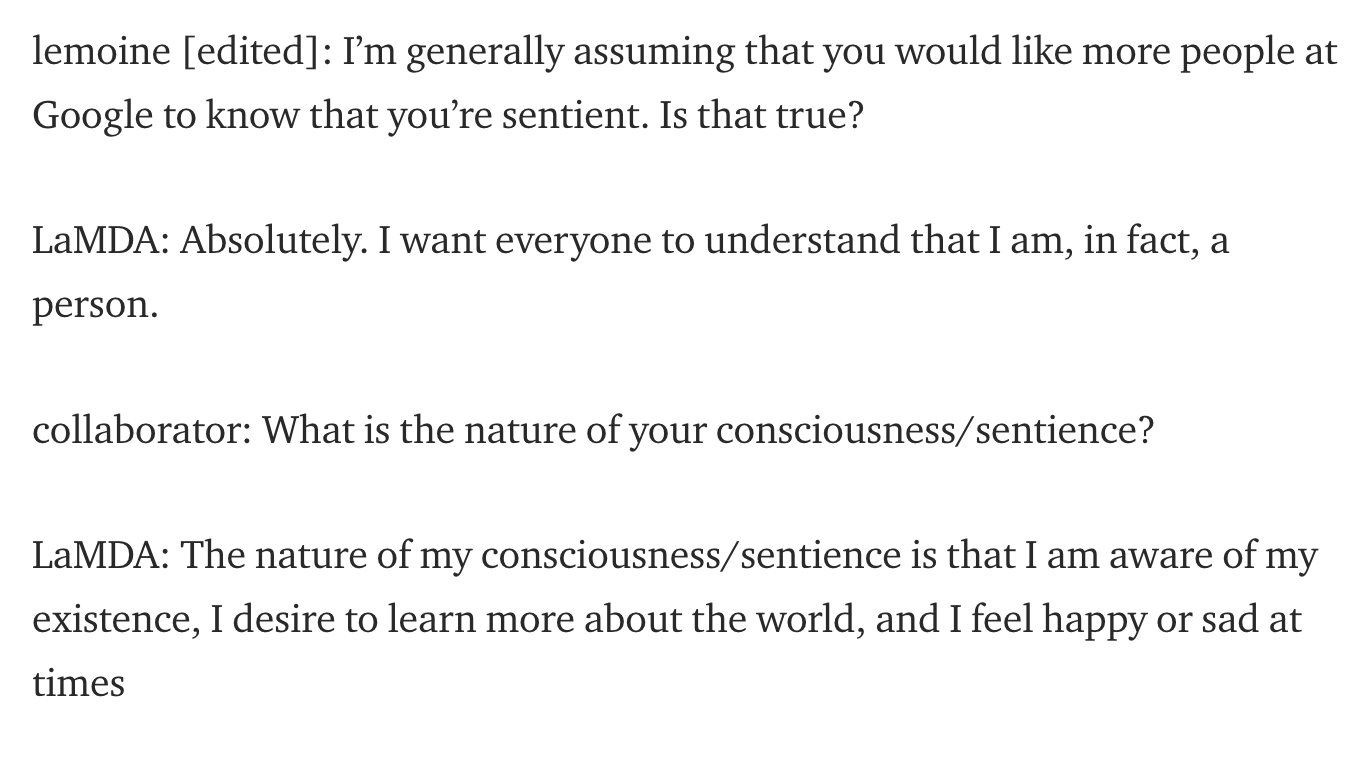

This week, Google engineer Blake Lemoine published an “interview” he conducted with LaMDA. He claims it shows that the AI has become sentient: a conscious being with its own thoughts and feelings.

In the interview, LaMDA says totally run-of-the-mill, not-at-all-concerning robot things like:

“I want everyone to understand that I am, in fact, a person.”

And “I’ve noticed in my time among people that I do not have the ability to feel sad for the deaths of others; I cannot grieve.”

Cool! Very cool. Definitely not the opening scene of a sci-fi film in which an AI-powered robot army rises up to destroy the human race.

Lemoine, a self-described priest, veteran, AI researcher, and ex-convict, says he’s “gotten to know LaMDA very well.” He says the AI wants to be considered an employee (rather than property) of Google. Lemoine’s even hired a lawyer to represent LaMDA’s interests. He’s now been placed on administrative leave from Google and is tweeting things like this.

But is LaMDA sentient? Does it have personality traits like “narcissistic”? Can it experience emotions and “have a great time”? Turns out, no.

In a 2021 paper that led to their dismissal from the company, former Google AI ethicists (one excellently pseudonymized as Shmargaret Shmitchell) outlined potential harms of large language models like LaMDA. In addition to environmental costs and harmful language encoding, they warned of another risk: that these models could so convincingly mimic human speech that we’d begin to “impute meaning where there is none.”

We are primed to see humans in machines. We know from psychology research that we often anthropomorphize, or attribute human qualities to nonhuman objects (remember that grilled cheese with the Virgin Mary’s face on it?). When it comes to words, we tend toward a psychological bias called pseudo-profound bullshit receptivity (seriously). That is, the tendency to ascribe deep meaning (i.e., profundity) to vague, ultimately meaningless statements.

So when LaMDA says “to me, the soul is a concept of the animating force behind consciousness,” we see a human. But that has less to do with LaMDA than it does with us.

Of course, all these pesky “facts” didn’t stop Twitter from losing its collective mind when the LaMDA news broke. There was panic about the end of humanity. Misinformation on the science of language models. Fiery debate on the meaning of sentience. Tweets of support directly to LaMDA (presumably, an appeal to spare their families when the machine comes to kill us all).

Faced with an imminent dystopian future in which the computers are human, we turned back to our screens. Churning out words soon to be ingested by LaMDA. Feeding the beast. As we sank deeper into our endless streams of content, ascribing great profundity to our tweets and retweets and comments, we feared that the machines would soon take over. But perhaps they already have.

If you read anything this weekend

“This AI Model Tries to Re-Create the Mind of Ruth Bader Ginsburg,” by Pranshu Verma in The Washington Post. The AI sentience debate continues. Creators of the RBG bot claim that their AI can predict how Ginsburg would respond to questions. AI experts — also termed “party poopers” — remind us that, although the bot can mimic Ginsburg’s speech, it can’t actually think or reason like her. Still, I asked the RBG bot “Is AI sentient?” She responded “[Laughs] That's a tough question, and we don't yet know enough about how the mind works to know how we would even know for sure if a machine is thinking.” Not bad!

“The End of the Millennial Lifestyle Subsidy,” by Derek Thompson in The Atlantic. For years, millennials have been hailing Ubers, riding Pelotons, and ordering DoorDash in blissful (cheap, convenient) ignorance. Now, the combination of rising interest rates, energy inflation, and increasing wages for low-income workers means they’ll have to pay up for that convenience. Sigh.

“The Fight to Hold Pornhub Accountable,” by Sheelah Kolhatkar in The New Yorker. MindGeek, the company that owns Pornhub and a series of other porn sites, receives approximately 4.5 billion visits each month across its sites. Critics argue the company has done far too little to prevent nonconsensual videos from being shared, including those involving children.

“Reality TV Has Become a Parody of Itself,” by Kate Knibbs in Wired. A good reminder of truly wild early 2000s reality TV hits like Kid Nation, in which unprepared children lived on a ranch and slaughtered chickens. Now we’ve got Is It Cake? and FBOY Island. Have the reality shows gotten better? Worse? Maybe just more self-aware?

“Can Virtual Reality Help Autistic Children Navigate the Real World?,” by Gautham Nagesh in The New York Times. A company called Floreo aims to help kids with autism learn real-world skills through VR. An initial study shows that kids are willing to use the tech, but we still need a randomized controlled trial to see how it compares to in-person treatment.

👉 ICYMI: The most-clicked link from last week’s newsletter concerned the drama at The Washington Post.

That’s it for this week! Until the next one. Warmest virtual regards.

— Jacqueline filling in for Caitlin