Actually, the internet's always been this bad

A new study considers 30 years of comments, with surprising conclusions

The internet is a festering, antisocial hellscape that has only gotten worse with time. That claim is held so widely — and so unequivocally — that it's become a ground truth of life online.

But a fascinating new study out today in Nature complicates the presumption that online discourse is bad and worsening. A team of Italian researchers evaluated more than half a billion comments spanning 30 years, and concluded that online discourse is no more "toxic" today than it was in the early 1990s.

"The toxicity level in online conversations has been relatively consistent over time, challenging the perception of a continual decline in the quality of discourse," said Walter Quattrociocchi, one of the study's authors, in an email. "While the platforms and how we use them have evolved, human behaviors in these spaces have remained surprisingly stable."

Given the noxious virtual muck we all flail around in, some of you may well be wondering if Quattrociocchi — the head of the Data and Complexity for Society Lab at Rome's Sapienza University — is, in fact, for real. Does he know about QAnon? Kiwifarms? LibsofTikTok? True crime fanatics or Star Wars stans? Has he never seen Nextdoor after a bike lane installation? Has he logged into Twitter since it became X?!

Reader, he has. (Or, well — I assume; I did not specifically ask.) Quattrociocchi and his team aren't denying the toxicity of the social internet.

Instead, they find that early online conversations — far from avoiding the negativity and combativeness that we associate with modern social media — actually followed similar patterns.

It's not the internet, babe. It's human nature.

You don't have to go home, but…

To come to that rather dispiriting conclusion, Quattrociocchi and his team analyzed half a billion comments from eight platforms between 1992 and 2022. To determine the toxicity of any given comment, they ran each one through Perspective API, an automated classifier tool also used by a number of social platforms and major publishers.

Perspective API claims to identify speech that is "rude, disrespectful or unreasonable" and "likely to make someone leave a discussion." It's a squishy definition, admittedly, but one that definitely encompasses a broad range of objectionable speech and behavior. After scoring the comments, researchers then looked at how toxicity accumulated across conversations, from '90s Usenet to 2010s YouTube and Twitter.

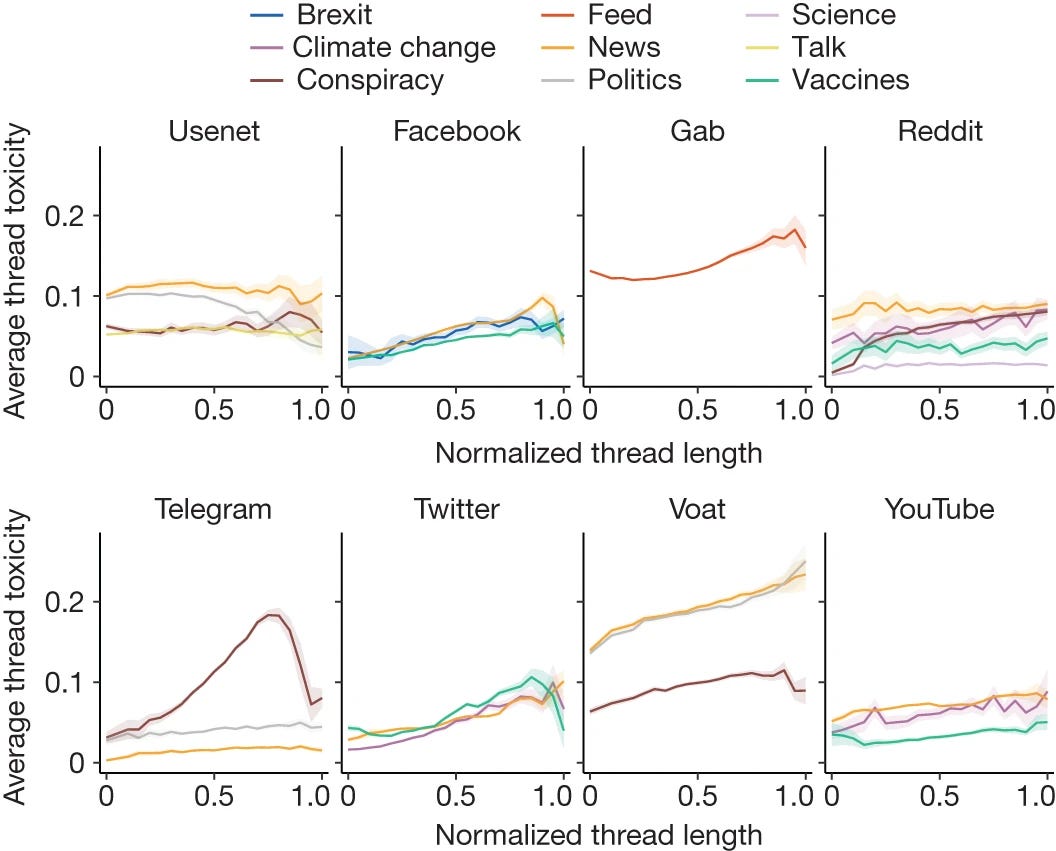

Those patterns proved surprisingly consistent across time and platforms: Overall, the study found that the prevalence of both toxic speech and highly toxic users was extremely low. But the longer any conversation goes on, on virtually any platform, the more toxic it becomes. At the same time, conversations tend to involve fewer, more active participants as they stretch on.

It's like a certain kind of Buffalo bar around last call: Most people have gone home for the night, but the stragglers are loud, uninhibited and prone to a certain level of mischief and/or aggression.

"Longer conversations potentially offer more opportunities for disagreements to escalate into toxic exchanges, especially as more participants join the conversation," Quattrociocchi said.
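For the code-inclined, here is a rough sketch of how one might check that "last call" pattern on a pile of scored comments. It is not the researchers' method. It assumes each comment already carries a toxicity score between 0 and 1, and the cutoff, the function names and the sample scores are all invented for illustration.

```python
# Hypothetical cutoff: a comment counts as "toxic" at or above this score.
# This value is an assumption for the sketch, not a figure from the article.
TOXICITY_THRESHOLD = 0.6


def toxic_share(scores, threshold=TOXICITY_THRESHOLD):
    """Return the fraction of scores at or above the threshold (0.0 if empty)."""
    if not scores:
        return 0.0
    return sum(s >= threshold for s in scores) / len(scores)


def toxic_share_by_stage(conversations, stages=2, threshold=TOXICITY_THRESHOLD):
    """Pool comments by how far into their conversation they appear.

    Each conversation is a list of scores in posting order. Every comment is
    assigned to one of `stages` position buckets (early ... late), and the
    toxic share is computed per bucket across all conversations.
    """
    buckets = [[] for _ in range(stages)]
    for scores in conversations:
        n = len(scores)
        for i, score in enumerate(scores):
            stage = min(i * stages // n, stages - 1)
            buckets[stage].append(score)
    return [toxic_share(bucket, threshold) for bucket in buckets]


# Made-up scores for two conversations, in posting order.
sample_conversations = [
    [0.1, 0.2, 0.1, 0.7],
    [0.05, 0.3, 0.65, 0.8, 0.2, 0.9, 0.1, 0.7],
]

early_share, late_share = toxic_share_by_stage(sample_conversations, stages=2)
print(f"early: {early_share:.2f}, late: {late_share:.2f}")
```

With this invented sample, the later half of the conversations comes out more toxic than the earlier half, which is the shape of the trend the study describes. Real data would need many more conversations and a defensible cutoff.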

Everything in moderation

That finding held true across seven of the eight platforms the team researched. By and large, those platforms also exhibited similar shares of toxic comments. On Facebook, for instance, roughly 4 to 6% of the sampled comments failed Perspective API's toxicity test, depending on the community/subject matter. On YouTube, by comparison, it was 4 to 7%. On Usenet, 5 to 9%.

Even infamously lawless, undermoderated communities like Gab and Voat didn't fall so far from the norm for more mainstream platforms: About 13% of Gab's comments were toxic, the researchers found, and between 10 and 19% were toxic on Voat.

There's something deeply unfashionable and counterintuitive about all of this. The suggestion that online platforms have not single-handedly poisoned public life is entirely out of step with the very political discourse the internet is said to have polluted.

In recent years, U.S. and European lawmakers have sought to regulate online speech by compelling platforms to take action on dangerous, false or otherwise toxic content, ranging from hate speech to vaccine disinformation.

That narrative reached something of a high point in the U.S. last week, when the House voted to ban TikTok in part over concerns that the app fosters misinformation and prejudice. There is something unique to the design and ownership of this specific app, the logic goes, that makes it especially toxic.

Quattrociocchi said it would be a mistake to assume his team's findings suggest that moderation policies or other platform dynamics don't matter; they absolutely "influence the visibility and spread of toxic content," he said. But if "the root behaviors driving toxicity are more deeply ingrained in human interaction," then effective moderation might involve both removing toxic content and implementing larger strategies to "encourage positive discourse," he added.

Toward less toxic spaces

Consider Reddit's science communities, which contained the least toxicity (a mere 1%!) of all the communities this study considered. The site's largest science subreddit, r/science, is a masterclass in proactive moderation. More than 1,000 volunteer mods, many of them scientists, filter out untrustworthy or dated studies, personal anecdotes and off-topic or offensive comments, among other stuff.

But the virtuous culture of r/science goes beyond the content mods remove: The group also invites a certain standard of conversation by verifying credentialed scientists, reinforcing its norms often (and automatically), and taking firm positions against things like climate change denial and anti-vax conspiracies.

It's worth noting two limitations of this paper: By its authors' own admission, it doesn't quite capture the disruptive effect of coordinated trolling campaigns, which can be far less predictable than individual behavior. The study also only considered text-based media, at a time when a great deal of online discourse plays out in videos.

If its findings still feel off to you, there might also be another culprit, Quattrociocchi said: People feel that online discourse has gotten worse because the growth of social media platforms, and the ease of commenting and sharing there, has increased the visibility of toxic content.

That creates "a bias in our perception of overall discourse quality," he said.

So: Happy posting! It's not all that bad.

Further reading

"Why the Past 10 Years of American Life Have Been Uniquely Stupid," by Jonathan Haidt for The Atlantic (2022)

"Every 'Chronically Online' Conversation Is the Same," by Rebecca Jennings for Vox (2022)

"So, You Want Twitter to Stop Destroying Democracy," by Katherine Cross for Wired (2022)

"How to Fix the Internet," by Katie Notopoulos for MIT Technology Review (2023)

"The Future of Free Speech, Trolls, Anonymity and Fake News Online," by Lee Rainie, Janna Anderson and Jonathan Albright for Pew Research Center (2017)

Weekend preview

I'm currently reading about the fall of Pitchfork and litblogs, the rise of celebrity podcasts, and the nine ways mifepristone bans "hurt everyone." (Plz see the first entry, miscarriage care, which we previously discussed in November.)

Until the weekend! Warmest virtual regards,

Caitlin

P.S. Did you ever belong to a Usenet newsgroup about politics? If so, I’d be curious to hear about it!

I like the perspective, yet I would like to add that 1990s Internet wasn't nearly as monetized as it is now. Therefore the incentive to keep people on websites (and algorithms rewarding disagreement - see Jaron Lanier's arguments on social media) wasn't as high. Yes, that means more visibility as already pointed out, but also that parts of the Internet have been actively designed to be worse!

I was not on any political newsgroups in the '90s but I was on Usenet. Google Groups used to support Usenet (sunset February 2024) and about five or six years ago, I went searching for myself and hoo boy, the CRINGE of my posts. I was so young.